In its essence, Confidential Computing is designed to protect and encrypt data while it is being processed. With the help of Trusted Execution Environments (TEEs) and verification mechanisms such as cryptographic attestation, your data stays private even from the cloud hosting provider, server administrators and the hypervisor itself.

Given the importance of privacy in today’s digital world, it is no surprise that this secure processing model is starting to appear in, and transform, industries such as healthcare and finance that require strict data governance, making it a stepping stone toward zero-trust cloud architectures and secure computing.

In this article, we focus on the ideas behind this security framework: its principles, history and implementations, its benefits, and some of the most popular use cases and industries where it thrives. Join us as we take a deeper look into the field of Confidential Computing!

What Is Confidential Computing?

Confidential Computing is a security paradigm designed to protect data while it is in use, that is, while it is being processed.

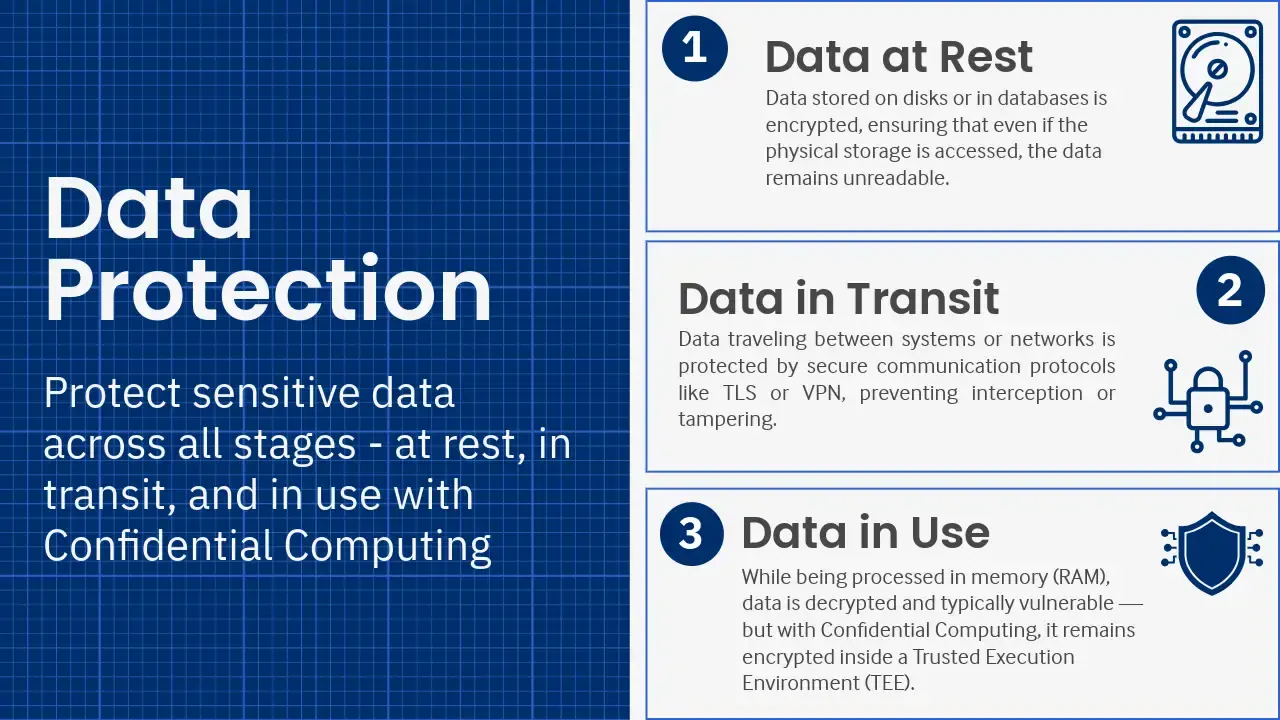

Traditionally, data protection has focused on two states: data at rest and data in transit. Data at rest is secured with disk or database encryption, so anyone who gains access to the storage medium sees only ciphertext, which helps prevent data leaks.

The same applies to data in transit, which is usually protected by secure communication protocols such as TLS or a VPN, so even if traffic on the channel is intercepted, it cannot be decrypted.

While these methods help secure data, a third state remains: data in use, when it is loaded into active memory (RAM).

To be processed by an application or a virtual machine, data has traditionally needed to be decrypted, leaving it exposed to the operating system, the hypervisor and even system administrators. This creates a significant gap in data security, leaving the door open to insider threats, memory scraping and other exploits at both the software and hardware level.

This is where Confidential Computing comes into play: it keeps sensitive data encrypted in memory and isolated during processing by using hardware-based Trusted Execution Environments (TEEs), where computation can run without outside interference.

Why the Sudden Need for Data Protection?

Over the last couple of decades, computing has undergone substantial changes, including the shift from on-premises servers to virtual machines and multi-tenant cloud platforms.

Virtual machines and cloud hosting offer unmatched scalability and versatility for anyone who needs reliable hosting infrastructure. However, concerns about data privacy and trust have become more prominent, fueled by a growing number of insider threats and persistent attacks in recent years.

Regulatory frameworks such as GDPR and HIPAA also require companies and governments to apply robust security when protecting sensitive information, further driving the need for a new data security framework in the form of Confidential Computing.

Brief History of Confidential Computing

The ideas behind the paradigm can be traced back to early academic work on trusted computing and hardware security modules (HSMs) in the late 1990s. Back then, however, the concept was largely theoretical; secure data-handling architectures evolved slowly and saw their first practical, widely available implementations only a little over a decade ago.

In 2019, these efforts to create secure data processing environments were brought together under the Confidential Computing Consortium (CCC), a Linux Foundation project that unites hardware vendors, hosting providers and developers to create open standards and frameworks for trusted execution and data encryption.

How Does Confidential Computing Work? TEEs & Enclaves

As mentioned, this paradigm protects data while it is being processed by encrypting and isolating it within Trusted Execution Environments, and it provides verifiable integrity through a process called attestation.

A TEE is a secure, isolated execution environment enforced by the processor that protects both the code and the data loaded into it. It is designed to prevent unauthorized access to, and modification of, that data while it is being processed.

The TEE is also isolated from the main operating system and the hypervisor, so even software with administrator privileges cannot access its memory. Combined with attestation, this lets users confirm that only the expected, trusted code is running inside the TEE, which helps prevent common attacks such as code injection and data tampering.

Computation inside a TEE typically takes place in enclaves: encrypted memory regions that are accessible only to the code running within them. They act as small vaults that decrypt incoming data, process it and encrypt it again before passing it on, keeping everything hidden from the operating system and, in the case of virtual machines, the hypervisor.
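To make the "vault" idea concrete, here is a minimal Python sketch, assuming a key that the data owner and the enclave already share (for example, one agreed after attestation). The names `seal`, `unseal` and `ToyEnclave` are hypothetical, and the SHAKE-256 keystream with an HMAC tag is only a stand-in for the hardware memory encryption a real TEE performs; it illustrates the decrypt, process, re-encrypt flow and is not suitable for protecting real data.

```python
import hashlib
import hmac
import secrets

# Toy sketch only (hypothetical helpers): a SHAKE-256 keystream plus an HMAC tag
# stands in for a TEE's hardware memory encryption. Not for protecting real data.
NONCE_LEN, TAG_LEN = 16, 32

def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    # Derive separate encryption and MAC keys from one shared secret.
    enc = hmac.new(key, b"enc", hashlib.sha256).digest()
    mac = hmac.new(key, b"mac", hashlib.sha256).digest()
    return enc, mac

def seal(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt-then-MAC; returns nonce || ciphertext || tag."""
    enc, mac = _subkeys(key)
    nonce = secrets.token_bytes(NONCE_LEN)
    stream = hashlib.shake_256(enc + nonce).digest(len(plaintext))
    ct = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(mac, nonce + ct, hashlib.sha256).digest()
    return nonce + ct + tag

def unseal(key: bytes, blob: bytes) -> bytes:
    """Check the tag first, then decrypt; raises ValueError on tampering."""
    enc, mac = _subkeys(key)
    nonce, ct, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    if not hmac.compare_digest(tag, hmac.new(mac, nonce + ct, hashlib.sha256).digest()):
        raise ValueError("integrity check failed")
    stream = hashlib.shake_256(enc + nonce).digest(len(ct))
    return bytes(c ^ s for c, s in zip(ct, stream))

class ToyEnclave:
    """Hypothetical 'vault': plaintext exists only inside process()."""

    def __init__(self, key: bytes):
        self._key = key  # assumption: agreed with the data owner after attestation

    def process(self, sealed_input: bytes, compute) -> bytes:
        data = unseal(self._key, sealed_input)  # decrypt on arrival
        result = compute(data)                  # compute on plaintext inside the enclave
        return seal(self._key, result)          # re-encrypt before passing it on

key = secrets.token_bytes(32)
enclave = ToyEnclave(key)
sealed_result = enclave.process(seal(key, b"balance=1200"), lambda d: d.upper())
print(unseal(key, sealed_result))  # b'BALANCE=1200'
```

Outside `process()`, only the nonce, ciphertext and tag are visible, and any modification is caught by the tag check before decryption.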

Verifying Integrity With Attestation

A core principle of Confidential Computing is the ability to verify that a TEE has not been compromised. Before sharing sensitive data with a TEE, a user can check that it is genuine and running the expected, trusted code, confirming that nothing has been tampered with.

There are two forms of attestation: local and remote. With local attestation, enclaves on the same platform verify one another. Remote attestation is used between an external system and the enclave to confirm that the enclave is running in a secure and trusted state.

Regardless of the form, the process produces a cryptographically protected report containing information about the enclave, such as its identity, software version and measurement hash (a hash of the code and data loaded into it). The requesting party then verifies this report to confirm that its data will be processed in a genuine, unmodified environment.
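The report check can be sketched in Python as follows. This is a simplified model under stated assumptions: an HMAC key (`PLATFORM_KEY`) stands in for the hardware-protected signing key, and the report fields and the helpers `generate_report` and `verify_report` are hypothetical rather than any vendor's format. Real remote attestation uses asymmetric signatures, so the verifier never holds the signing key.

```python
import hashlib
import hmac
import json
import secrets

# Assumption: an HMAC key stands in for the hardware-protected attestation key.
# Real remote attestation uses asymmetric signatures (e.g. ECDSA quotes), so the
# verifier checks a certificate chain instead of sharing a secret key.
PLATFORM_KEY = secrets.token_bytes(32)

def measure(code: bytes) -> str:
    """Measurement hash of the code loaded into the enclave."""
    return hashlib.sha256(code).hexdigest()

def _sign(body: dict) -> str:
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(PLATFORM_KEY, payload, hashlib.sha256).hexdigest()

def generate_report(code: bytes, enclave_id: str, version: int, nonce: str) -> dict:
    """Hypothetical report produced by the 'hardware'; the nonce comes from the verifier."""
    body = {"enclave_id": enclave_id, "version": version,
            "measurement": measure(code), "nonce": nonce}
    return {"body": body, "signature": _sign(body)}

def verify_report(report: dict, expected_measurement: str,
                  min_version: int, nonce: str) -> bool:
    body = report["body"]
    return (hmac.compare_digest(report["signature"], _sign(body))  # genuine report
            and body["measurement"] == expected_measurement          # expected code
            and body["version"] >= min_version                       # not outdated
            and body["nonce"] == nonce)                              # fresh, not replayed

trusted_code = b"def score(x): return x * 2"
nonce = secrets.token_hex(16)
good = generate_report(trusted_code, "risk-model", 3, nonce)
evil = generate_report(b"def score(x): leak(x)", "risk-model", 3, nonce)
print(verify_report(good, measure(trusted_code), 2, nonce))  # True
print(verify_report(evil, measure(trusted_code), 2, nonce))  # False
```

The verifier-supplied nonce is what prevents an old, previously valid report from being replayed.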

Key Management and Hardware Root of Trust

Confidential Computing relies on hardware-based key management to safeguard the encryption keys used within TEEs. These keys are generated and held inside the processor (or a dedicated security processor) and are never exposed to system memory or to software layers such as the operating system or hypervisor.
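A common pattern behind this is key derivation: the processor keeps a root secret and hands out only keys derived from it and from the enclave's identity, loosely similar to how SGX derives sealing keys. The sketch below assumes an HMAC-SHA256 derivation and a hypothetical `ToyCPU` class; it is not the actual derivation any vendor uses.

```python
import hashlib
import hmac
import secrets

class ToyCPU:
    """Hypothetical CPU model: holds a root key and hands out only derived keys."""

    def __init__(self):
        # Stands in for a key fused into or generated inside the processor.
        # (Python cannot truly hide it; real hardware never exposes it to software.)
        self._root_key = secrets.token_bytes(32)

    def derive_key(self, measurement: bytes, purpose: bytes) -> bytes:
        # Assumed HMAC-SHA256 derivation: binding the key to the enclave's measurement
        # means a modified enclave derives a different key and cannot unseal the data.
        return hmac.new(self._root_key, purpose + b"|" + measurement, hashlib.sha256).digest()

cpu = ToyCPU()
original = hashlib.sha256(b"enclave build 1.0").digest()
modified = hashlib.sha256(b"enclave build 1.0 + backdoor").digest()
k1 = cpu.derive_key(original, b"seal")
print(k1 == cpu.derive_key(original, b"seal"))       # True: same enclave, same key
print(k1 == cpu.derive_key(modified, b"seal"))       # False: modified enclave, different key
print(k1 == ToyCPU().derive_key(original, b"seal"))  # False: another CPU, different root key
```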

Additionally, each TEE is tied to a hardware Root of Trust: an immutable security component inside the processor that is responsible for secure boot and cryptographic verification. The Root of Trust ensures that even the earliest boot code is validated before it executes, helping to prevent attacks that target the boot chain.
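The validate-before-execute idea can be modeled as a chain in which each boot stage is hashed and compared with an expected value before control is handed to it, while the hashes are also chained into a cumulative measurement that attestation can later report. The stage names, image contents and `EXPECTED` table below are hypothetical placeholders, not a real firmware layout.

```python
import hashlib

BOOT_ORDER = ("bootloader", "kernel")

# Hypothetical expected hashes; on real hardware the first check is anchored in
# immutable Root-of-Trust code, and expected values usually come from signed manifests.
EXPECTED = {
    "bootloader": hashlib.sha256(b"bootloader v3").hexdigest(),
    "kernel": hashlib.sha256(b"kernel 6.1").hexdigest(),
}

def secure_boot(images: dict[str, bytes]) -> bytes:
    """Validate each stage before 'running' it and return a cumulative boot measurement."""
    measurement = bytes(32)  # starts from all zeros
    for stage in BOOT_ORDER:
        digest = hashlib.sha256(images[stage]).digest()
        if digest.hex() != EXPECTED[stage]:
            raise RuntimeError(f"boot halted: {stage} failed verification")
        # Hash-chain the stage into the running measurement so attestation can report it.
        measurement = hashlib.sha256(measurement + digest).digest()
        # Only at this point would control be handed to the verified stage.
    return measurement

good = {"bootloader": b"bootloader v3", "kernel": b"kernel 6.1"}
print(secure_boot(good).hex())
try:
    secure_boot(dict(good, kernel=b"kernel 6.1 + rootkit"))
except RuntimeError as err:
    print(err)  # boot halted: kernel failed verification
```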

Common Threats and Attacks

Speaking of threats and attacks, here are some of the most common ones and how Confidential Computing mitigates them:

| Threat Type | Vulnerability | Mitigation |
|---|---|---|
| Insider threats | Cloud or system administrators can inspect and access memory or runtime data. | Data in use is isolated and encrypted inside TEEs, so even system administrators cannot read it. |
| OS/hypervisor compromise | A compromised kernel or hypervisor can expose VM or container memory. | The CPU blocks the host OS and hypervisor from reading TEE-protected memory. |
| Memory scraping / cold boot | Attackers extract data from DRAM or swap; decrypted runtime data is also accessible to malware. | Memory is encrypted by the CPU, so data is unreadable outside the TEE. |
| Physical attacks | Hardware tampering or memory-bus probing. | Memory encryption keeps data on the bus and in DRAM unreadable, and secure boot helps detect manipulation of firmware and boot code. |
| Software tampering | Injection of untrusted code or libraries. | Attestation lets relying parties confirm that only verified code runs inside the enclave. |
| Data theft in multi-tenant clouds | Shared infrastructure exposes tenants to cross-VM attacks. | Hardware-enforced isolation (per-enclave or per-VM keys) separates tenants. |

As the table shows, encrypting and isolating memory while it is being processed addresses some of the biggest privacy concerns and mitigates many common threats and attacks.

Confidential Computing Technologies - Intel SGX & AMD SEV

Now that we’ve discussed Confidential Computing as a paradigm, let’s look at how it is put into practice. Two of the most prominent hardware approaches for securing data while it is being processed are Intel SGX and AMD SEV.

Intel SGX

Software Guard Extensions (SGX) is Intel’s implementation of Confidential Computing. With SGX, enclave memory resides in a protected region called the Enclave Page Cache (EPC), where the processor keeps it encrypted. Only code running inside the enclave can access this data; access attempts from other code, including the operating system, are blocked by the processor, which gives enclaves strong encapsulation. Enclaves can also be verified through remote attestation, which uses cryptographic quotes originally verified by Intel’s Attestation Service (IAS).

AMD SEV

Secure Encrypted Virtualization (SEV), on the other hand, is AMD’s implementation of Confidential Computing. It is also hardware-based, but it protects entire virtual machines by encrypting their memory, so that neither the hosting provider nor a compromised or faulty hypervisor can read the data in the virtual machine.

Introduced in 2016, SEV assigns a unique encryption key to each virtual machine. It has since evolved through SEV-ES, which also encrypts CPU register state so that register contents do not leak to the hypervisor, to SEV-SNP, which adds memory integrity protection. SEV-SNP guarantees that if a virtual machine can read a private page of memory, it always reads the value it last wrote there. The hardware enforces this with a Reverse Map Table (RMP) that tracks page ownership: attempts to overwrite or remap a guest’s private pages trigger a fault instead of silently returning tampered data. As with SGX, cryptographic attestation lets the user verify the integrity of the virtual machine before trusting it with data.
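The ownership check behind this guarantee can be illustrated with a heavily simplified Python model. `ToySNPMemory`, `RMPViolation` and their methods are hypothetical; the model ignores encryption, page validation and many other details of the real RMP, and only shows why a guest either reads its last-written value or gets a fault.

```python
class RMPViolation(Exception):
    """Stands in for the fault the hardware raises when an RMP check fails."""

class ToySNPMemory:
    """Hypothetical, heavily simplified model of SEV-SNP page-ownership checks."""

    def __init__(self):
        self.npt = {}     # (guest, guest address) -> physical page; managed by the hypervisor
        self.rmp = {}     # physical page -> (owning guest, guest address it belongs at)
        self.memory = {}  # physical page -> contents

    def assign(self, guest: str, gpa: int, spa: int) -> None:
        """Give physical page `spa` to `guest` at guest address `gpa`."""
        self.npt[(guest, gpa)] = spa
        self.rmp[spa] = (guest, gpa)

    def _check(self, guest: str, gpa: int) -> int:
        spa = self.npt[(guest, gpa)]
        if self.rmp.get(spa) != (guest, gpa):  # mapping no longer matches the ownership record
            raise RMPViolation(f"guest {guest}: page at {gpa:#x} failed RMP check")
        return spa

    def guest_write(self, guest: str, gpa: int, data: bytes) -> None:
        self.memory[self._check(guest, gpa)] = data

    def guest_read(self, guest: str, gpa: int) -> bytes:
        return self.memory[self._check(guest, gpa)]

    def hypervisor_write(self, spa: int, data: bytes) -> None:
        if spa in self.rmp:  # page is assigned to a guest
            raise RMPViolation(f"hypervisor write to guest-owned page {spa:#x} blocked")
        self.memory[spa] = data

    def hypervisor_remap(self, guest: str, gpa: int, spa: int) -> None:
        self.npt[(guest, gpa)] = spa  # allowed: the hypervisor still manages the page tables

mem = ToySNPMemory()
mem.assign("vm1", gpa=0x1000, spa=0xA000)
mem.guest_write("vm1", 0x1000, b"balance=100")
try:
    mem.hypervisor_write(0xA000, b"balance=999")  # attempt to overwrite guest data
except RMPViolation as err:
    print(err)  # hypervisor write to guest-owned page 0xa000 blocked
print(mem.guest_read("vm1", 0x1000))  # b'balance=100'
mem.hypervisor_remap("vm1", 0x1000, 0xB000)  # point the guest address at another page
try:
    mem.guest_read("vm1", 0x1000)
except RMPViolation as err:
    print(err)  # guest vm1: page at 0x1000 failed RMP check
```

The hypervisor still controls the page tables in this model, just as it does in practice; what it loses is the ability to make a guest silently read forged or stale data.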

The following table compares the two, highlighting their similarities, differences, benefits and drawbacks.

| Principle | Intel SGX | AMD SEV-SNP* |
|---|---|---|
| Primary isolation unit | CPU enclave (a region inside an application’s address space); enclave code and data are isolated from the host OS and hypervisor. | Virtual machine (VM): all guest memory is encrypted with per-VM keys. SEV-ES protects CPU register state; SEV-SNP adds nested paging integrity and attestation. |
| Typical use cases | Confidential functions, DRM, secrets handling, confidential modules, enclaves for secure ML primitives, MPC helper functions. | Confidential VMs, multi-tenant cloud workload isolation, confidential containers; well suited to VM protection without code changes. |
| Isolation granularity | Fine-grained (per enclave, per process). | Coarse (whole VM). |
| Memory encryption scope | Enclave pages are encrypted in processor memory (EPC) and protected by CPU keys. EPC size has historically been limited (128 MB on many platforms) but varies by CPU family, and newer Xeon families list larger per-CPU EPC capacities; Linux supports paging EPC contents to a backing store, at a performance cost. | Whole-VM guest memory is encrypted by the memory controller with per-VM keys; SEV-SNP adds protections that bind memory mappings and integrity to the guest owner. |
| Attestation model | Remote attestation via quotes: EPID (older, verified through Intel’s Attestation Service, IAS) and DCAP/ECDSA (data-center model), which lets cloud operators and enterprises host their own attestation services. Quote generation uses the Quoting Enclave (QE) and Provisioning Certification Enclave (PCE). | SEV-SNP includes an attestation mechanism that produces reports the guest owner can verify; its attestation model is stronger than that of earlier SEV generations. |
| Key management / root of trust | Rooted in CPU hardware, firmware and microcode. Keys used for quoting and sealing are derived by the CPU and used within SGX enclaves (including the QE and PCE); DCAP enables ECDSA-based attestation in data centers. | Keys are managed by the AMD Secure Processor (firmware); per-VM keys are never exposed to the hypervisor. SEV-SNP enforces stronger measurement and anti-rollback semantics in its keys and guest-launch flow. |
| Code and runtime visibility (host/admin) | The host OS and hypervisor cannot read enclave memory, but the host still controls scheduling and I/O, so the enclave must explicitly marshal I/O through the host. | The hypervisor cannot read encrypted guest memory; SEV-SNP also protects nested paging integrity. |
| Typical performance and overhead | Low overhead for computation inside the enclave; overhead appears when crossing the enclave boundary or paging EPC memory, which can be noticeable for memory-heavy workloads. Actual numbers are workload-dependent. | Minimal runtime overhead for plain memory encryption; small overheads for cryptographic key operations and SNP attestation. I/O and hypervisor interactions still carry normal VM costs. |
| Memory limits / constraints | Historically constrained by EPC size (128 MB on many platforms; newer families and multi-socket systems support larger EPCs; Linux supports EPC paging, with overhead). Enclave size planning is critical. | No EPC-like small-buffer constraint per VM: the whole VM’s memory is encrypted, so practical limits are those of normal VM sizing. |
| Ease of migration / operational model | Requires reworking applications to split secrets and logic into enclaves, with more developer effort and lifecycle changes (CI/CD, attestation). | Low friction for existing VMs: many workloads gain encryption without code changes, and operations resemble existing VM management plus attestation workflows. |
| Best-fit scenarios | Small, high-value workloads where fine-grained isolation and attestation of application code matter (secret stores, small ML kernels, DRM, MPC helper functions). | Whole-VM confidentiality with minimal application changes and multi-tenant isolation at the VM level; good for enterprise VMs and confidential containers where supported. |
| Maturity and standardization | Mature SDKs and production deployments, with ecosystems such as Open Enclave and Gramine; attestation has evolved from EPID to DCAP/ECDSA. | Matured rapidly with SEV-SNP and has been adopted by hyperscalers for confidential VM products, with standardization efforts via the CCC and ecosystem projects. |
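Because enclave size planning matters so much for SGX, a quick back-of-the-envelope calculation helps. The sketch assumes 4 KiB EPC pages and uses the table's 128 MB figure (treated here as 128 MiB) purely as an example input; `pages_needed` and `epc_plan` are hypothetical helpers, and the usable EPC on a given platform can be smaller than the nominal size, so it should be checked for the actual CPU.

```python
MIB = 1024 * 1024
EPC_PAGE = 4 * 1024  # SGX manages EPC memory in 4 KiB pages

def pages_needed(nbytes: int) -> int:
    return -(-nbytes // EPC_PAGE)  # ceiling division

def epc_plan(working_set: int, usable_epc: int) -> dict:
    """Hypothetical estimate of how many enclave pages would not fit in the EPC."""
    need = pages_needed(working_set)
    have = usable_epc // EPC_PAGE
    return {"pages_needed": need, "pages_available": have,
            "pages_overflow": max(0, need - have)}

# Example inputs only: a 256 MiB working set against a nominal 128 MiB EPC.
print(epc_plan(256 * MIB, 128 * MIB))
# {'pages_needed': 65536, 'pages_available': 32768, 'pages_overflow': 32768}
print(epc_plan(64 * MIB, 128 * MIB)["pages_overflow"])  # 0
```

Any overflow pages have to be swapped between the EPC and regular memory, which is where the paging overhead described in the table comes from.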

Use Cases

Thanks to these benefits, Confidential Computing is being adopted across many industries. With growing demand for privacy and stricter data regulation, it is becoming a common choice wherever sensitive data is processed.

One prominent example is, of course, the financial industry. With an abundance of sensitive data that requires strict regulation and protection, banks, insurers and fintech providers are turning to this security paradigm to process and analyze data without exposing it to other parties or to their hosting provider.

It also enables confidential smart contracts: blockchain nodes running inside TEEs can verify and execute these contracts while keeping their information undisclosed.

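Healthcare is another prominent example, as it is one of the most heavily regulated industries globally; Confidential Computing lets providers and researchers process and share patient data without exposing it to the infrastructure it runs on.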

AI and machine learning can also benefit significantly from Confidential Computing, because they usually involve collecting, storing and using sensitive datasets that contain personal information. Confidential Computing helps keep that data protected while it is used for training and inference.

Other areas where it can be useful include telecommunications, manufacturing, industrial operations and any other sector that needs to protect sensitive information.

In general, this security approach helps organizations meet data protection regulations, which is a key reason for its adoption across these industries:

| Regulation | How Requirements Are Met |
|---|---|
| GDPR (EU) | Helps keep personal data confidential and under control, even when it is processed in the cloud; supports privacy by design and by default. |
| HIPAA (US healthcare) | Protects patient data during processing and supports secure data sharing and auditability. |
| PCI DSS (payments) | Provides hardware-backed encryption and attestation to help secure financial transactions. |
| ISO/IEC 27001 | Strengthens data isolation and control measures that support compliance certification. |
| SOC 2 / SOC 3 | Attestation logs can provide evidence of data confidentiality controls. |

Confidential Computing can also play a pivotal role in several newly emerging use cases, including:

| Emerging Domain | Opportunity |
|---|---|
| Confidential AI & Federated Learning | Secure training and inference where data and models remain encrypted; essential for cross-enterprise AI. |
| Confidential Data Marketplaces | Data owners can sell or share data insights without revealing raw data, using TEE-verified computations. |
| Blockchain and Web3 | Off-chain smart-contract execution in TEEs ensures confidentiality and verifiable trust for decentralized applications. |
| Zero Trust Architecture Integration | TEEs anchor zero-trust policies by enforcing that each workload is attested and verified before communication. |
| Edge and IoT Security | Hardware-rooted trust extends from cloud to edge devices, enabling verifiable device identity and secure MEC applications. |

Overall, Confidential Computing offers opportunities for every sector, especially those that handle sensitive information and must meet data security regulations. By protecting data while it is being processed, it closes a gap that went unaddressed for decades, completing protection across all three states of data: at rest, in transit and in use. And because secure processing can be verified through cryptographic attestation, Confidential Computing is well positioned to become the new standard for handling sensitive data.